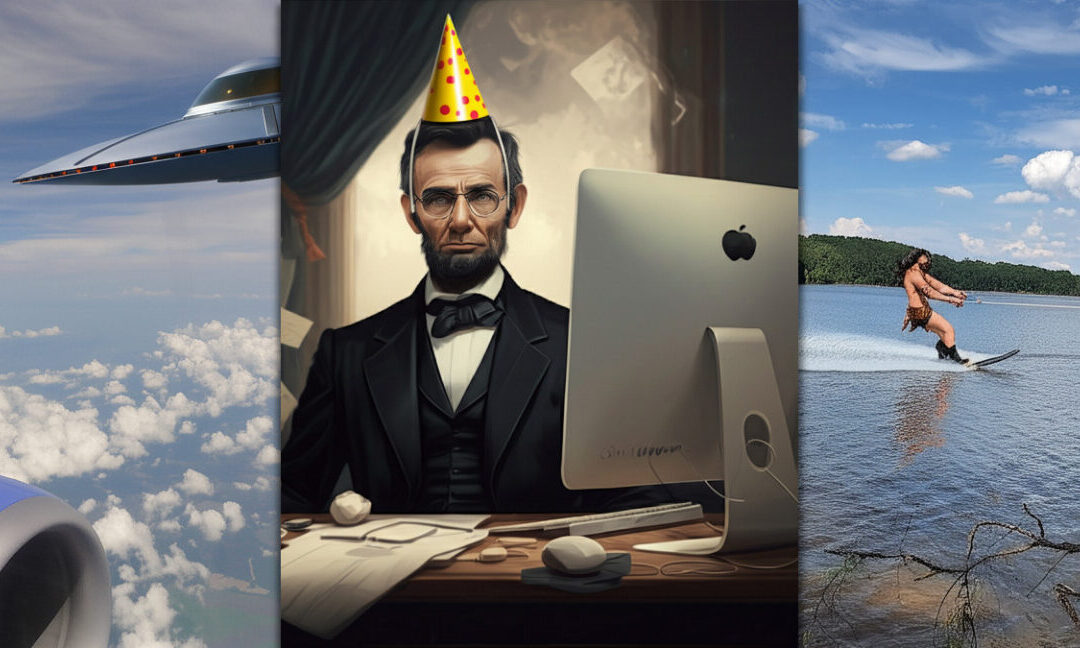

Google has recently expanded access to its experimental AI model, Gemini 2.0 Flash, which offers native image-generation capabilities. The technology, which integrates both text and image processing, was previously only available to testers but is now accessible via Google AI Studio. Among its abilities, Gemini 2.0 Flash can add or remove objects from images, modify scenery, alter lighting, and even attempt to change image angles and zoom levels. However, its effectiveness varies depending on the subject, style, and specific image.

The model was trained on a large dataset of images and text, so its understanding of images and the world concepts it learned from text sources share the same neural network. This lets it output image tokens, which are then converted back into images for the user.
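As a rough illustration of what "image tokens" can mean, the toy sketch below treats text and image tokens as IDs in one shared vocabulary and turns the image tokens back into pixels through a lookup table. This is not Google's architecture, which is not described in that detail here; the vocabulary, the codebook, and the function names are all hypothetical.

```python
# Toy sketch only: one shared token vocabulary for text and image output.
# The vocabulary layout, codebook, and function names are hypothetical.

TEXT_VOCAB = ["<bos>", "a", "red", "square", "<img>", "</img>"]
IMAGE_TOKEN_OFFSET = len(TEXT_VOCAB)  # image token IDs start after the text IDs

# Hypothetical codebook: each image token stands for one RGB pixel.
CODEBOOK = [
    (0, 0, 0),        # image token 0 -> black
    (255, 0, 0),      # image token 1 -> red
    (255, 255, 255),  # image token 2 -> white
]


def split_stream(token_ids):
    """Separate a mixed output stream into text words and image token indices."""
    words, image_tokens = [], []
    for tid in token_ids:
        if tid < IMAGE_TOKEN_OFFSET:
            words.append(TEXT_VOCAB[tid])
        else:
            image_tokens.append(tid - IMAGE_TOKEN_OFFSET)
    return words, image_tokens


def decode_image(image_tokens, width):
    """Convert a flat list of image tokens into rows of RGB pixels."""
    if width <= 0 or len(image_tokens) % width != 0:
        raise ValueError("token count must be a multiple of width")
    pixels = [CODEBOOK[t] for t in image_tokens]
    return [pixels[i:i + width] for i in range(0, len(pixels), width)]


# A made-up model output: some text followed by a 2x2 image.
stream = [0, 1, 2, 3, 4, 7, 7, 7, 8, 5]
words, image_tokens = split_stream(stream)
image = decode_image(image_tokens, width=2)
```

In a production system the lookup table would presumably be replaced by a learned decoder that maps each token to an image patch rather than a single pixel; the sketch only shows how text and image output can come from the same token stream.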

Integrating image generation into an AI chat is not a new concept; OpenAI and other tech companies have already incorporated the feature. What sets Gemini 2.0 Flash apart is that it acts as both a large language model (LLM) and an AI image generator within a single system.

OpenAI’s GPT-4o is also capable of native image output, but the company has not yet released true multimodal image output to users.